The Problem With AI Is Shitty Human Beings

The problem with AI isn't going to be Skynet. It's going to be amoral extraction class assholes applying half-cooked automation at scale onto deeply broken sectors in exploitative ways in a country too corrupt to have functioning regulators.

If you've wandered into the online discourse surrounding "AI" lately, you may have noticed that it's gotten somewhat, uh, heated.

The biggest current ideological collision is between developers warning of rapid, useful, and transformative advancements in software automation, and folks justifiably incensed that those advancements will arrive alongside a gargantuan, historic ratfucking of creatives, labor, the environment, and democracy itself.

If the former wander onto platforms dominated by the latter (like Bluesky) to suggest some of the hype may be warranted, they're inevitably bombarded with reminders of the very real human costs of an automation revolution run by people who have enthusiastically cozied up to fascism.

This has resulted in a fussy consensus among AI fans that they "can't have an open conversation about AI with the left" because they might be reminded, in some narrow corners of the internet, that the tech companies dominating AI's trajectory are helping the planet's shittiest people build a badly automated murder autocracy.

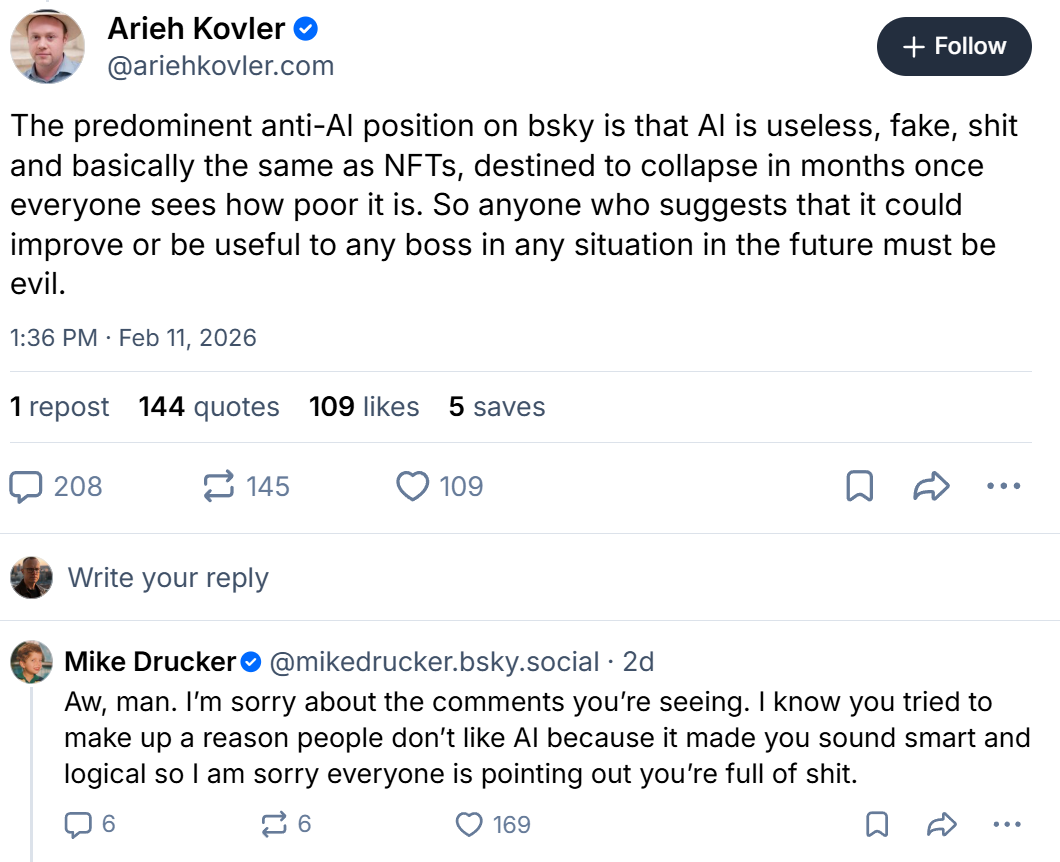

As a result I'm seeing a lot of exchanges online like this:

To be clear, AI advocates have no shortage of places (both real and virtual) to happily discuss the ways they feel large language models (LLMs) are improving their daily productivity and reinvigorating their love of computing.

Most of the internet (see this Clawdbot TikTok trend) and most of the media (see the constant barrage of "CEO said a thing!" journalism) are utterly dedicated to propping up the more corporatist #hustlebro visions of AI's impact.

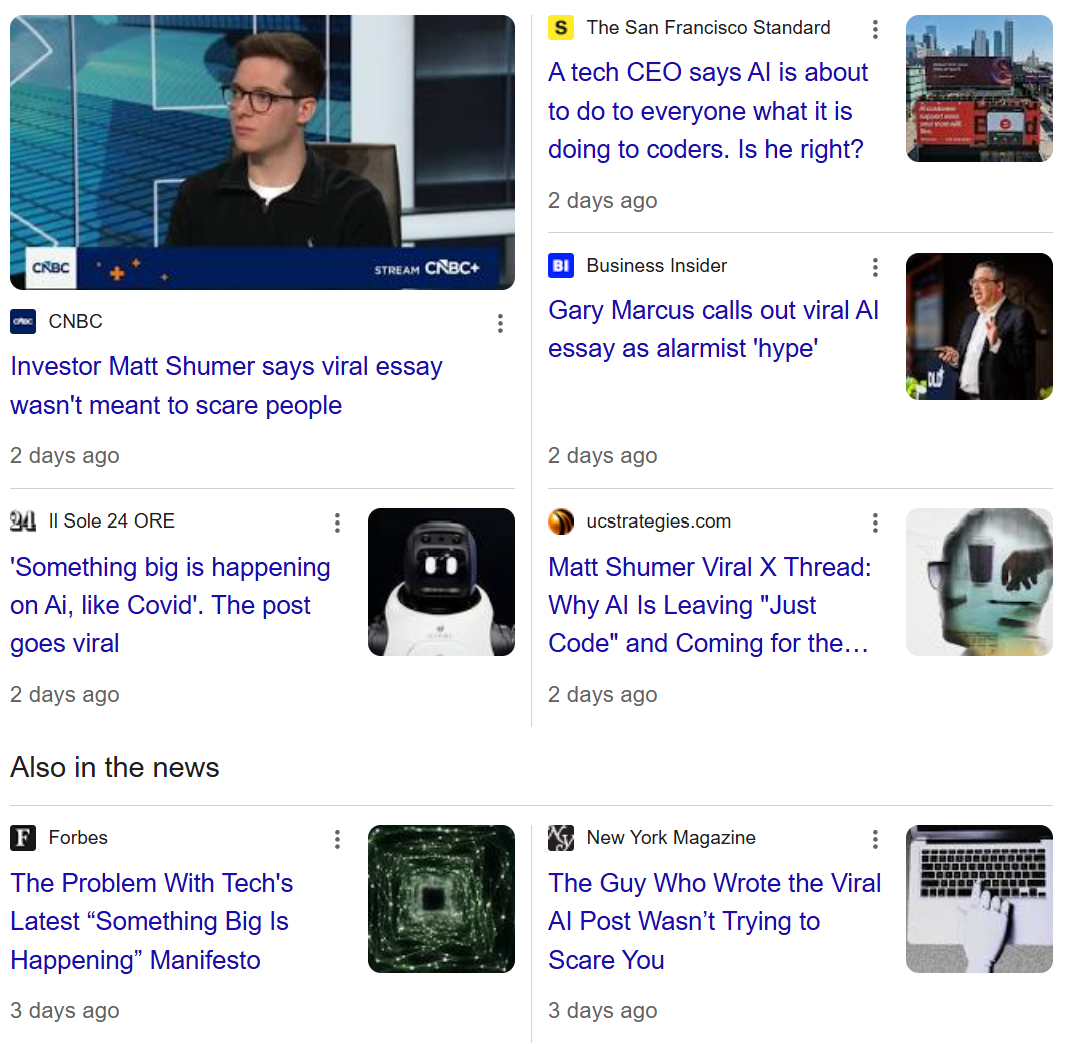

This post from last week by AI industry CEO Matt Shumer is one such example. The post's purportedly "chilling" message went viral, generating 50 million views and quickly becoming an entire news cycle.

He outlines how rapidly the technology has been advancing, how much AI quality has improved since the early days of the Will Smith spaghetti incident, and how the AI systems you laughed at just six months ago are not the same models being trained today, requiring AI critics to continually reassess their priors:

> I am no longer needed for the actual technical work of my job. I describe what I want built, in plain English, and it just... appears. Not a rough draft I need to fix. The finished thing. I tell the AI what I want, walk away from my computer for four hours, and come back to find the work done. Done well, done better than I would have done it myself, with no corrections needed. A couple of months ago, I was going back and forth with the AI, guiding it, making edits. Now I just describe the outcome and leave.

With the caveat that this is a person who sells and invests in AI systems for a living, I do think that post is worth a quick read to better understand the generational advancements in software automation being made, or, at least, how the developers of these advancements view themselves.

That said, if you read through Shumer's post, you'll notice something curious: all of the most problematic issues surrounding AI simply aren't mentioned. Either because his financial self-interest doesn't allow for honest acknowledgment of them, or because he simply doesn't find those aspects all that interesting.

I think this sanitized ethical, societal, and political myopia is where a lot of the "I'm not allowed to talk about AI" blowback originates. You will start to notice a certain thematic consistency among the most bullish AI advocates: a hyper-personalized fixation on productivity gains to the exclusion of all else. Including factual reality, ethics, and foundational empathy.

There's no mention of Elon Musk's AI data centers generating pollution illegally aimed directly at the surrounding city's minority populations. There's no mention of how the immense power consumption of AI has resulted in companies discarding their already-tepid climate goals, threatening foundational human existence, something that (as always) will hit the vulnerable and marginalized the hardest.

There's no discussion about how the extraction class sees AI as central to their plans to destroy organized labor, or that rushed AI adoption in fields like journalism has been profoundly disastrous both in the degradation of existing journalism and the propping up of cheaply-produced autocratic propaganda.

There's no acknowledgement that many of these services and systems simply aren't profitable, or that the entire AI economy is currently being propped up by an undulating ouroboros of dodgy financials that, should it unravel prematurely, is likely to trigger potentially-catastrophic ripples across the entire economy.

Similarly, you'll find there's frequently little acknowledgement that some of the productivity gains from AI appear to be illusory.

56% of CEOs questioned in a recent PwC survey stated that AI hasn't produced revenue or cost benefits for their businesses to date. One recent Stanford study found that a greater prevalence of AI-generated slop has, in reality, made a lot of people's jobs harder because they now have to waste a lot of time sorting through mountains of useless cack generated by shortcut-seeking coworkers who believe they've revolutionized their workflow, dude.

Will those metrics shift as the technology evolves? Maybe! Should they be central to any honest conversations about our looming AI revolution? Absolutely. They certainly weren't proportionately represented in the massive media attention Shumer's blog post received last week.

Recently we've seen several key AI researchers leave the sector in ethical protest, openly noting that they're witnessing firsthand the lack of meaningful corporate guardrails in AI development, or the active suppression of research critical of the technology's rushed implementation.

You might recall that a few years ago OpenAI's original board was broadly concerned with the fact that OpenAI founder Sam Altman was a terrible fucking person with all manner of problematic global conflicts of interest. The industry, and by extension the tech press, collectively decided to dismiss the entirety of those concerns as the rantings of hyperbolic lunatics.

When strong AI proponents from within the industry do pop up with what pass for informed ethical concerns, they're very often based around the belief that modern LLMs will suddenly and miraculously become sentient and haphazardly decide to wipe humanity from existence:

Artificial intelligence keeps getting smarter — and soon, warns former Google CEO Eric Schmidt, it won’t take orders from us anymore.

This idea that current LLMs are just a few skips (and another fifty billion in taxpayer subsidies) away from true sentience just isn't supported by factual reality. This sort of doomerism simultaneously serves as marketing that overstates what LLMs are actually capable of and redirects press and public attention away from some of the very ordinary human failures dominating AI development.

That's not to say there isn't plenty to worry about given the close tethers between tech companies (who long ago forfeited any benefit of the doubt on their ethical integrity), military contractors, and our current reigning psychopaths incapable of hiding their erections about automated mass surveillance and murder.

But to my eyes, the problem probably isn't going to be Skynet. The problem will center around letting amoral extraction class assholes apply half-cooked automation at scale onto deeply broken sectors in exploitative ways in a country too corrupt to have functioning regulators. The very human banalities of AI evil.

Check out, for example, this story about how UnitedHealth – the same company whose CEO was murdered in the street for predatory capitalism – implemented AI systems with a 90 percent error rate to wrongfully deny critical health coverage to elderly patients, then ambiguously blamed "the system" for very human choices.

To be clear, AI may wind up being transformative and part of an economy-shaking bullshit bubble simultaneously, and there are a lot of people from colliding cross sections of society (labor, development, activism, civil rights) certainly talking over and under each other across wide swaths of expertise and experience.

But folks annoyed by online animosity toward AI need to remember it's an extension of justified rage at the extraction class's grotesque enabling of the literal destruction of democracy. That rage is going to prove extremely helpful in the months and years to come, and deserves plenty of latitude.

Even if one concedes that large language models can be transformational, the grand vision of modern automation's benefits can never materialize if its stewards are foundationally fucking terrible human beings uninterested in the contours of empathy. If we're not talking prominently about that, we aren't really talking at all.